Visual effects work commonly requires both creating realistic synthetic humans and retargeting actors’ performances to humanoid characters such as aliens and monsters. Achieving the expressive performances demanded in entertainment requires manipulating complex models with hundreds of parameters. Full creative control requires the freedom to make edits at any stage of production, which rules out a fully automatic “black box” solution with uninterpretable parameters. On the other hand, producing realistic animation with these sophisticated models is difficult and laborious.

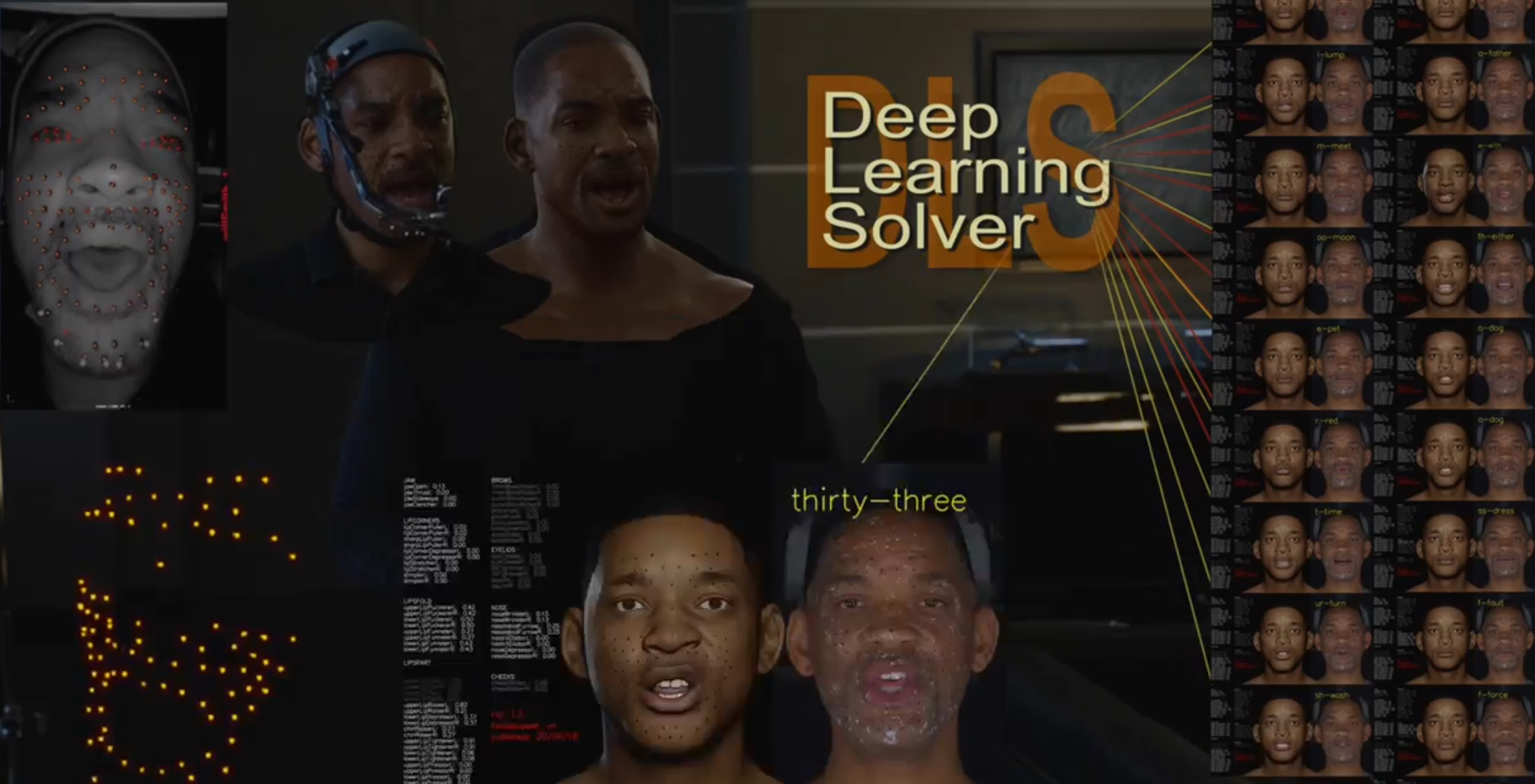

This paper describes FDLS (Facial Deep Learning Solver), Weta Digital’s solution to these challenges. FDLS adopts a coarse-to-fine, human-in-the-loop strategy, allowing a solved performance to be verified and, if needed, edited at several stages of the solving process. To train FDLS, we first transform the raw motion-capture data into robust graph features. The feature extraction algorithms were devised after carefully observing how artists interpret the 3D facial landmarks. Second, based on the observation that artists typically finalize the jaw-pass animation before proceeding to finer detail, we solve for the jaw motion first and then predict fine expressions with region-based networks conditioned on the jaw position. Finally, artists can optionally invoke a non-linear fine-tuning process on top of the FDLS solution to follow the motion-capture markers as closely as possible. FDLS allows the deep learning solution to be edited where needed to improve the result, and it can handle small day-to-day changes in the actor’s face shape.
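The coarse-to-fine ordering described above can be illustrated with a minimal Python sketch. It is not the FDLS implementation: the function `solve_frame`, the `review` callback, and the toy lambda "models" are hypothetical stand-ins for the trained jaw and region networks, and the optional fine-tuning stage is left out. The sketch only shows the control flow, in which the jaw is solved first, an artist may verify or edit it, and each region model then receives the (possibly edited) jaw value as conditioning input.

```python
from typing import Callable, Dict, List, Optional

Vector = List[float]
Model = Callable[[Vector], Vector]
# Hypothetical review hook: receives a stage name and its solved values,
# returns the values to use (unchanged, or edited by an artist).
Review = Callable[[str, Vector], Vector]


def solve_frame(
    features: Vector,
    jaw_model: Model,
    region_models: Dict[str, Model],
    review: Optional[Review] = None,
) -> Dict[str, Vector]:
    """Solve one frame coarse-to-fine: jaw first, then jaw-conditioned regions."""
    # Coarse stage: solve the jaw from the extracted features.
    jaw = jaw_model(features)
    if review is not None:
        jaw = review("jaw", jaw)

    # Fine stage: each region model sees the features plus the final jaw value.
    conditioned = features + jaw
    controls: Dict[str, Vector] = {"jaw": jaw}
    for name, model in region_models.items():
        values = model(conditioned)
        if review is not None:
            values = review(name, values)
        controls[name] = values
    return controls


# Toy stand-ins for trained networks (assumptions for illustration only).
toy_jaw_model: Model = lambda f: [sum(f) / len(f)]
toy_region_models: Dict[str, Model] = {
    # The last input element is the jaw value appended by solve_frame.
    "brow": lambda x: [0.5 * x[0] + x[-1]],
}


def open_jaw_fully(stage: str, values: Vector) -> Vector:
    """Example artist edit: override the jaw, leave other stages untouched."""
    return [1.0] if stage == "jaw" else values


automatic = solve_frame([0.25, 0.5, 0.75], toy_jaw_model, toy_region_models)
edited = solve_frame(
    [0.25, 0.5, 0.75], toy_jaw_model, toy_region_models, review=open_jaw_fully
)
```

In this toy setup, overriding the jaw changes the brow output as well, because the region model is evaluated after the edit. That propagation is the reason for placing a review point between the coarse and fine stages.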

FDLS permits reliable and extremely high-quality performance solving with minimal training and little or no manual effort in many cases, while also allowing the solve to be guided and edited in unusual and difficult cases. The system has been under development for several years and has been used in major movies.

Paper in the ACM Digital Library